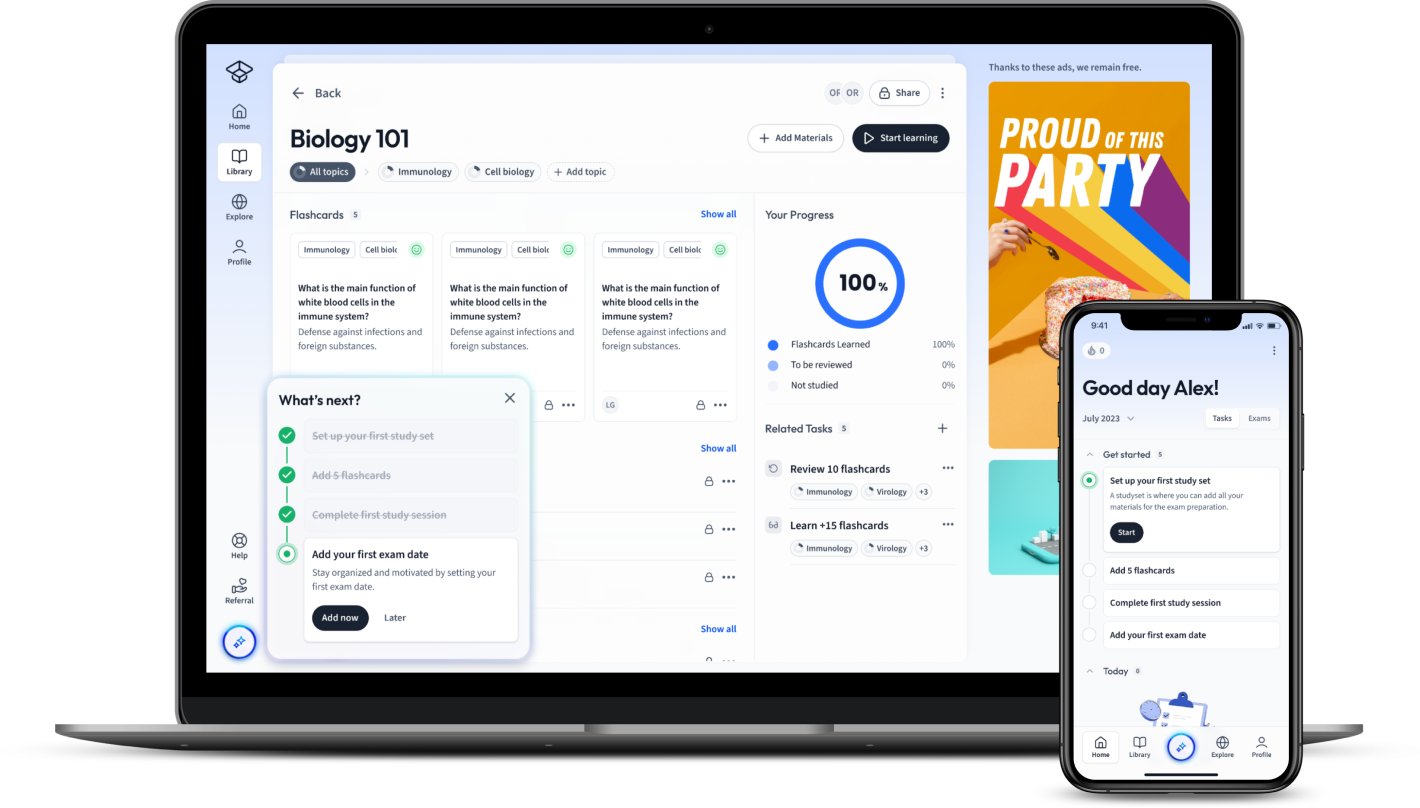

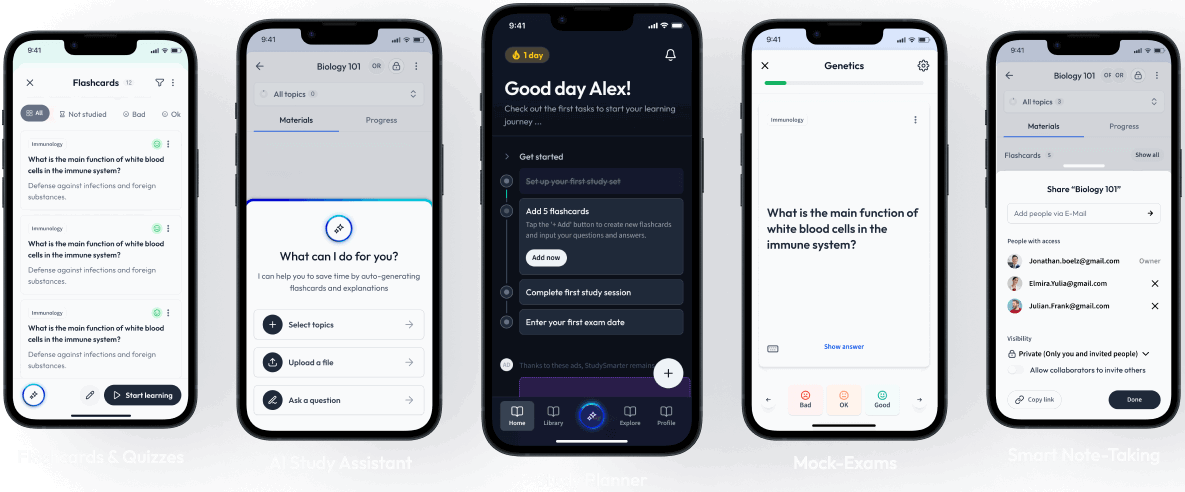

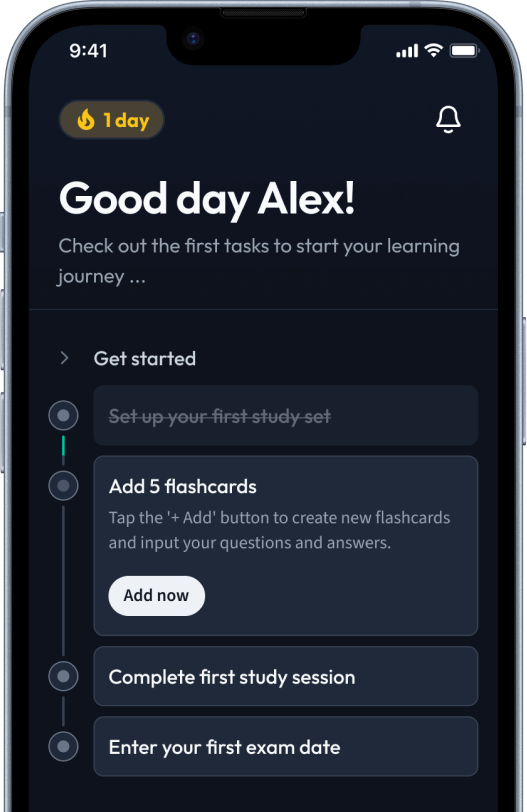

StudySmarter - The all-in-one study app.

4.8 • +11k Ratings

More than 3 Million Downloads

Free

Americas

Europe

In the digital age, you're inundated with an immense amount of information every day. This is where the concept of Big Data comes into play. Big Data allows you to understand and analyse large volumes of data that are beyond the capacity of traditional databases. This guide will delve into the intricacies of Big Data, shedding light on its meaning and historical context. You will also get acquainted with the innovative tools and technologies developed to manage Big Data.


The role of a Big Data engineer is crucial in this realm; therefore, exploring what they do and the skills they possess for achieving success in their field is indispensable. Big Data analytics is another facet you will be exploring, understanding how it works and its diverse applications.

Lastly, you need to comprehend the 4 Vs of Big Data - volume, variety, velocity, and veracity, their significance in Big Data management and why they're integral to harnessing the potential of Big Data. Long story short, this guide is your gateway to acquiring an in-depth understanding of Big Data and its profound impact in the modern world.

Big Data is a popular concept in the world of technology and computer science. Essentially, Big Data refers to a wealth of information so vast in volume, variety and velocity that conventional data handling methods fall short. To truly appreciate what Big Data entails, it's essential to understand its history, key characteristics, sources, and real-world applications, among other elements.

While there isn't a set-in-stone definition, Big Data generally refers to datasets that are so large or complex that traditional data processing application software is inadequate to handle.

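The term Big Data transcends the mere size or volume of datasets. Three key characteristics (or Vs) are most often associated with it: Volume (the size of the data), Velocity (the speed at which data is generated and processed), and Variety (the diverse forms the data takes).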

However, as big data continues to evolve, additional Vs have emerged such as Veracity, Value, and Variability. These represent the truthfulness of the data, the usefulness of the extracted information, and the inconsistency of the data over time, respectively.

We owe the term 'Big Data' to an article published by Erik Larson in the 'American Scientist' back in 1989. However, the importance of large-scale data gathering precedes even this. For example, the 1880 U.S. census took years to tabulate by hand, which prompted the adoption of Herman Hollerith's punch card tabulating system for the 1890 census.

Historically, Big Data refers to the idea of handling and making sense of vast amounts of information. In essence, it's about collecting, storing, and processing the ever-growing sea of data generated by digital technologies.

Consider Google, the world’s most dominant search engine. It processes over 3.5 billion search requests per day. Traditional data processing systems would falter under such a load. Hence, Google relies on Big Data technologies to store and interpret these vast quantities of search data.

Research firm IDC has predicted that by 2025, there will be around 175 zettabytes of data in the world. This astronomical amount of data emphasizes the ever-increasing importance and relevance of Big Data. Being equipped with a strong understanding and ability to work with Big Data will continue to be a crucial skill in the technology and computer science fields.

Big Data processing is virtually impossible with traditional means. Therefore, numerous tools and technologies have been developed to handle the volume, velocity, and variety associated with it. These tools aim to extract meaningful insights, ensure accuracy, and add value to businesses or research. Learn more about the types of Big Data technologies, how they work, and examples of how they’re used in various sectors.

Several tools and technologies are constantly being innovated to help efficiently navigate the unpredictable waters of Big Data. Ranging from software platforms to data mining tools to cloud-based solutions, these technologies exhibit diverse capabilities for different stages of the Big Data life cycle.

Below is a list of some of the widely used Big Data technologies:

| Technology | Use |
|---|---|
| Apache Hadoop | Distributed processing of large data sets across clusters of computers |
| Apache Spark | Speed up data processing tasks |
| NoSQL Databases | Manage large amounts of non-relational data |
| Machine Learning Platforms | Automate analytical model-building |

Big Data technologies represent the collection of software utilities, frameworks, and hardware devices one can utilize to capture, store, manage, and perform complex queries on large sets of data.

Big Data technologies are transforming sectors including healthcare, education, e-commerce, and finance. Looking at practical applications sheds more light on their relevance and potential.

An excellent example of a company utilizing Big Data technologies is Amazon, the retail giant. Amazon leverages Big Data technologies to analyse the preferences, purchasing behaviour and interests of its customers in order to personalize recommendations. Amazon also uses Big Data for demand forecasting, price optimization, and improving logistics.

If we look at the healthcare sector, Big Data technologies are used for predictive analytics to improve patient care. For instance, Google's DeepMind Health project collects data from patients to help health professionals predict illness and prescribe interventions in the early stages for better patient outcomes.

Banks and financial institutions tap into Big Data technologies to detect fraudulent transactions in real-time. For instance, machine learning algorithms can learn a pattern of a user's spending and flag any unusual transaction.

Such examples are increasingly becoming commonplace as businesses acknowledge the abundance and relevance of Big Data and the technologies designed to handle it.

A Big Data Engineer plays an indispensable role in dealing with the complexities of Big Data. Being a pivotal figure in data-driven businesses, the Big Data Engineer designs, constructs, tests, and maintains architectures such as large-scale processing systems and databases. Understanding this role, its associated responsibilities, and required skills would provide an insight into the world of Big Data.

Big Data Engineers are the masterminds constructing the systems responsible for gathering, organising, and analysing data. Their crucial role is often underestimated and misunderstood despite its significant importance to businesses in diverse sectors.

A plethora of activities make up the day-to-day task list of a Big Data Engineer, from designing and constructing data architectures to testing and maintaining them.

Automation is another key aspect of their work. A Big Data Engineer will create automated methods for collecting and preparing immense amounts of data for analysis. Moreover, a Big Data Engineer is responsible for delivering operational excellence, i.e., maintaining system health, ensuring data integrity and system security.

A Big Data Engineer is a professional who develops, maintains, tests and evaluates Big Data solutions within organisations. They are responsible for designing, developing, testing, and maintaining highly scalable data management systems.

Investing in these professionals is crucial for organisations seeking to get the upper hand in their sectors, as engineers play a huge part in transforming Big Data into actionable insights.

Consider a large multinational corporation that handles millions of customer transactions. The Big Data Engineer is tasked with designing a system capable of processing, storing, and analysing these transactions in real-time to provide valuable insights for the management. These insights can then be used to make informed decisions such as targeting marketing campaigns or identifying potential areas for business expansion.

The work of Big Data Engineers extends beyond developing and maintaining systems. They are expected to stay on top of emerging industry trends and technologies, and they can influence the architecture of future tools by promoting better data handling, extraction and storage techniques. This involves attending seminars and conferences, engaging with industry publications and even pursuing advanced certification in areas such as machine learning and cloud computing.

Big Data Engineers need a portfolio of technical and non-technical skills to manoeuvre the complexities of building and maintaining Big Data systems. These skills fall into three groups: fundamental must-have skills, technical skills specific to Big Data technologies, and non-technical skills such as problem-solving and communication.

Learning these skills might seem an intimidating task, but a keen interest in data, coupled with the right educational and learning resources, can help pave the way to becoming a successful Big Data Engineer.

A successful Big Data Engineer hones a unique combination of technical, analytical, and soft skills. These help in designing, constructing and maintaining Big Data infrastructure, transforming complex data into comprehensible insights, and collaborating effectively with teams. Keeping up to date with evolving technologies is also a requisite.

Consider a scenario in which a financial firm wants to understand customer behaviour in order to optimise its product offerings. Here, a Big Data Engineer draws on technical skills to design a system capable of collecting, storing and processing the firm's customer data. Problem-solving skills help the engineer navigate the challenges that emerge during the process. Communication skills come into play when translating the gathered insights into actionable strategies for the marketing and product teams.

Furthermore, staying updated with evolving trends and technologies boosts their capabilities. For example, if a new data processing framework presents itself in the market promising better efficiency or ease of use, a well-informed Big Data Engineer can evaluate its feasibility for the firm’s current architecture and potentially integrate it to achieve improved performance.

Big Data Analytics is the process of examining large datasets to uncover hidden patterns, correlations, market trends, customer preferences, or other useful business information. This information can be analysed for insights that lead to better decisions and strategic business moves. It's the foundation for machine learning and data-driven decision-making, both of which have a significant impact on the world of business and research.

Big Data Analytics is a complex process that often involves various stages. At the most basic level, it entails gathering the data, processing it, and then analysing the results.

The first step involves data mining, the process of extracting useful data from larger datasets. This involves a combination of statistical analysis, machine learning and database technology to delve into large volumes of data and extract trends, patterns, and insights. The extracted data is usually unstructured and requires cleansing, manipulation, or segregation to prepare it for the processing phase.

In the processing phase, the prepared data is processed using different techniques depending on the type of analysis required: real-time or batch processing. Real-time processing is generally used for time-sensitive data where instant insights are needed. Batch processing deals with huge volumes of stored data, and is usually scheduled during off-peak business hours.

Batch processing is a method of running high-volume, repetitive data jobs. The batch method allows for job execution without human intervention, with the help of a batch window that is usually a set period when system usage is low.

Real-time processing is the processing of data as soon as it enters the system. It requires a high-speed, high-capacity infrastructure, as it is typically used for resource-intensive, complex computations and real-time reporting.
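The difference between the two modes can be sketched with a simple average. This is a minimal illustration in plain Python rather than a real processing framework; the names `batch_average` and `RunningAverage` and the sample readings are assumptions made for the example.

```python
def batch_average(stored_values):
    """Batch style: process the whole stored dataset in one scheduled run."""
    return sum(stored_values) / len(stored_values)


class RunningAverage:
    """Real-time style: update the result as each new value arrives."""

    def __init__(self):
        self.count = 0
        self.mean = 0.0

    def update(self, value):
        # Incremental mean: no need to keep or re-read earlier values.
        self.count += 1
        self.mean += (value - self.mean) / self.count
        return self.mean


readings = [10.0, 20.0, 30.0, 40.0]  # hypothetical sensor readings

# Batch: one pass over everything that has been stored.
batch_result = batch_average(readings)

# Real-time: an up-to-date answer is available after every event.
stream = RunningAverage()
for value in readings:
    latest = stream.update(value)

print(batch_result, latest)
```

Both approaches reach the same final figure, but the streaming version has an answer ready after every event, whereas the batch version only produces one when the scheduled job runs.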

The tools and technologies used at this stage are usually Big Data processing frameworks such as Apache Hadoop and Apache Spark. Hadoop, for example, uses the MapReduce programming model, in which the dataset is divided into smaller parts that are processed simultaneously. This type of processing is known as parallel processing.
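The split-then-combine idea behind MapReduce can be sketched as a word count in plain Python. This is a single-machine illustration under stated assumptions, not Hadoop's actual API: the function names `map_chunk`, `reduce_counts` and `word_count`, and the sample sentences, are hypothetical.

```python
from collections import Counter
from concurrent.futures import ThreadPoolExecutor
from functools import reduce


def map_chunk(lines):
    """Map step: count words within one chunk of the dataset."""
    counts = Counter()
    for line in lines:
        counts.update(line.lower().split())
    return counts


def reduce_counts(left, right):
    """Reduce step: merge two partial counts into one."""
    return left + right


def word_count(lines, num_chunks=3):
    """Split the data, map the chunks concurrently, then reduce."""
    size = max(1, -(-len(lines) // num_chunks))  # ceiling division
    chunks = [lines[i:i + size] for i in range(0, len(lines), size)]
    # Threads stand in for the worker machines of a real cluster.
    with ThreadPoolExecutor() as pool:
        partials = list(pool.map(map_chunk, chunks))
    return reduce(reduce_counts, partials, Counter())


sample = ["big data is big", "data moves fast", "big insights from data"]
totals = word_count(sample)
print(totals.most_common(2))
```

In a real cluster each chunk would live on a different machine and the partial counts would be shuffled across the network before being reduced; the sketch keeps only the logical structure.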

Once the data is processed, data scientists or data analysts perform the actual data analysis. This could be descriptive analysis, diagnostic analysis, predictive analysis, or prescriptive analysis.

Upon completion of the analysis, the results need to be visualised and the insights communicated to the stakeholders to facilitate informed and data-driven decision making.

For instance, in a sales department trying to boost profits, Big Data analytics might reveal that sales are higher in certain geographical areas. This insight could lead decision-makers to focus marketing efforts in those areas, tailoring strategies to expand customer base, resulting in increased sales and overall profitability.

Big Data Analytics is transforming the way businesses and organisations operate, powering decision-making with data-driven insights. Its use cases are extensive and span multiple sectors. The following examples illustrate how Big Data Analytics makes a significant impact.

The first example comes from the retail sector. E-commerce platforms like Amazon utilise Big Data Analytics to understand the purchasing habits of their customers, which enables personalisation of their online shopping experience. By analysing browsing and purchasing patterns, Amazon can recommend products targeted at individual customer preferences, contributing to an increase in sales and customer satisfaction.
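One simple way to turn purchasing patterns into recommendations is to count which items are bought together. The sketch below illustrates that general idea only and is not a description of Amazon's system; the helper names `build_co_purchases` and `recommend` and the sample baskets are assumptions made for the example.

```python
from collections import Counter, defaultdict
from itertools import permutations


def build_co_purchases(baskets):
    """Count how often each pair of items appears in the same basket."""
    co_counts = defaultdict(Counter)
    for basket in baskets:
        for item, other in permutations(set(basket), 2):
            co_counts[item][other] += 1
    return co_counts


def recommend(co_counts, item, top_n=2):
    """Return the items most often bought together with `item`."""
    return [other for other, _ in co_counts[item].most_common(top_n)]


# Hypothetical purchase history: one list of items per order.
baskets = [
    ["laptop", "mouse", "laptop bag"],
    ["laptop", "mouse"],
    ["phone", "phone case"],
    ["laptop", "mouse", "keyboard"],
]
co_counts = build_co_purchases(baskets)
print(recommend(co_counts, "laptop", top_n=1))
```

Production recommenders typically combine many more signals, such as browsing history and ratings, but the co-purchase count shows how raw transaction logs become a personalised suggestion.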

In the healthcare sector, Big Data Analytics is used to predict epidemic outbreaks, improve treatments, and provide a better understanding of diseases. For instance, the Google Flu Trends project attempted to predict flu outbreaks based on searches related to flu and its symptoms. Although the project was discontinued, it highlighted the potential of Big Data Analytics in forecasting disease outbreaks.

In the field of finance, banking institutions are using Big Data Analytics for fraud detection. By analysing past spending behaviours and patterns, machine learning algorithms can identify unusual transactions and flag them in real-time for investigation, reducing the risk of financial fraud.
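The idea of flagging a transaction that departs from a customer's usual spending can be sketched with a basic statistical rule. Here a z-score threshold stands in for the machine learning model, which is an assumption made for brevity; the function name `is_unusual`, the threshold of 3 and the sample amounts are likewise hypothetical.

```python
from statistics import mean, stdev


def is_unusual(history, amount, threshold=3.0):
    """Flag an amount far from the user's typical spending (z-score rule)."""
    if len(history) < 2:
        return False  # not enough history to judge
    avg = mean(history)
    spread = stdev(history)
    if spread == 0:
        return amount != avg  # any deviation from a constant pattern
    return abs(amount - avg) / spread > threshold


# Hypothetical past transaction amounts for one customer.
past_spending = [42.0, 38.5, 51.0, 45.2, 40.3, 47.8]

print(is_unusual(past_spending, 44.0))   # close to the usual range
print(is_unusual(past_spending, 900.0))  # far outside the usual range
```

A real fraud system would weigh many more features, such as location, merchant and time of day, but the principle is the same: learn what normal looks like, then score each new transaction against it.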

Moreover, Big Data Analytics plays a pivotal role in enhancing cybersecurity. By analysing historical data on cyber-attacks, security systems can predict and identify potential vulnerabilities and mitigate risks proactively, thereby enhancing the overall security of networks and systems.

These use cases illustrate how Big Data Analytics exploits reserves of complex, unstructured data for valuable insights, translating into data-driven decision-making across varied sectors.

At the heart of understanding Big Data, you will often come across the concept referred to as the '4 Vs'. To truly get to grips with big data, it is crucial to understand these four key characteristics commonly associated with it: Volume, Velocity, Variety, and Veracity. Understanding the 4 Vs will provide a comprehensive overview of the complexities inherent in managing Big Data, and why it's significant in the landscape of data management.

Big Data is usually described by four main characteristics or Vs: Volume, Velocity, Variety, and Veracity. Let's delve deeper into each of them.

Volume is the V most commonly associated with Big Data and it refers to the sheer size of the data that is produced. Its immense size is what makes it 'Big' Data. The volume of data being produced nowadays is measured in zettabytes (1 zettabyte = 1 billion terabytes). The development of the internet, smartphones, and IoT technologies has led to an exponential rise in data volume.

Velocity pertains to the pace at which new data is generated. With the advent of real-time applications and streaming services, data velocity has gained more focus. The faster the data is produced and processed, the more valuable the insight. In particular, real-time information can be invaluable for time-sensitive applications.

Variety is concerned with the diversity of data types. Data can be structured, semi-structured or unstructured, and the increase in variety of data sources has been remarkable. Data variety includes everything from structured numeric data in traditional databases to unstructured text documents, emails, videos, audios, stock ticker data and financial transactions.

Veracity refers to the quality or trustworthiness of the data captured. As data volume grows, so can inconsistencies, ambiguities and data quality mismatches. Managing data veracity is challenging, considering the varied data sources and types. Refining this data to obtain clean and accurate datasets is a key step before analysis.

These 4 Vs clearly represent the fundamental characteristics of Big Data. Below is a tabular summary:

| V | Description |
|---|---|
| Volume | Massive quantity of generated data |
| Velocity | Speed of data generation and processing |
| Variety | Types and sources of data |
| Veracity | Quality and trustworthiness of data |

The 4 Vs of Big Data refer to Volume, Velocity, Variety and Veracity, which are the key characteristics defining the challenges and opportunities inherent in Big Data. These characteristics also represent the critical parameters one must take into account while dealing with Big Data tools and technologies.

Understanding the 4 Vs is imperative to grasp the challenges associated with Big Data management. These 4 Vs are interconnected, and their management plays a critical role in extracting valuable insights from raw data.

For instance, high 'Volume' makes storage a challenge, especially at high 'Velocity'. Massive data 'Variety', from structured to unstructured, adds to the complexity of processing and analysis. 'Veracity' matters because the data used for processing and analysis must be credible and reliable.

Effective Big Data management requires strategies that can handle all 4 Vs efficiently. Managing the volume requires an efficient storage solution that doesn't compromise on processing power. To deal with velocity, a flexible infrastructure capable of processing data in real-time is required. Increased variety calls for sophisticated data processing and management methodologies that can handle unstructured data. As for veracity, data cleaning and filtering techniques are critical to eliminate noise and error from data.
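The cleaning and filtering step for veracity can be sketched as a small function. This is a minimal illustration, assuming records arrive as dictionaries with hypothetical `order_id` and `amount` fields; the rules shown (drop incomplete, implausible and duplicate records) are examples, not an exhaustive list.

```python
def clean_records(records):
    """Drop duplicates and records with missing or implausible values."""
    seen_ids = set()
    cleaned = []
    for record in records:
        order_id = record.get("order_id")
        amount = record.get("amount")
        if order_id is None or amount is None:
            continue  # incomplete record
        if amount < 0:
            continue  # implausible value, treated as noise
        if order_id in seen_ids:
            continue  # duplicate of a record already kept
        seen_ids.add(order_id)
        cleaned.append(record)
    return cleaned


# Hypothetical raw data with typical quality problems.
raw = [
    {"order_id": 1, "amount": 19.99},
    {"order_id": 1, "amount": 19.99},  # duplicate
    {"order_id": 2, "amount": None},   # missing amount
    {"order_id": 3, "amount": -5.00},  # negative amount
    {"order_id": 4, "amount": 7.50},
]
cleaned = clean_records(raw)
print([record["order_id"] for record in cleaned])
```

Which rules count as "noise" depends on the domain; a negative amount might be a valid refund in one dataset and an error in another.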

Big Data management involves handling the 4 Vs effectively to turn meaningless data into valuable insights. Successful data strategies must tackle high volume, manage high velocity, process a variety of data types, and verify the veracity of datasets. Each of these Vs poses unique challenges, and their efficient management is crucial to tap into the potential of Big Data.

Let's say an e-commerce company wants to implement a recommendation engine. To do so, they need to analyse their user behaviour data. This data will likely be at a high Volume, given the number of users and transactions. The data will also have high Velocity as user interactions are continuously logged in real-time. The Variety will come from the different types of data sources - user clicks, cart history, transaction history etc. Veracity becomes important, as it’s necessary to ensure the data being analysed is accurate and reliable.

Having a firm grasp of these 4 Vs and the interplay between them helps organisations understand not just how to deal with the Big Data deluge but also how to exploit it for business advantage, allowing them to serve their customers better, optimise their operations, create new revenue streams and stay ahead of the competition.

Big data refers to extremely large data sets that may be analysed to reveal patterns, trends, and associations, especially relating to human behaviour and interactions. This can include data sets with sizes beyond the ability of traditional software tools to capture, manage, and process within a tolerable elapsed time. Big data has three characteristics: volume (amount of data), velocity (speed of data in and out), and variety (range of data types and sources). It's often used in fields such as healthcare, business and marketing, with the aim of improving services and performance.

Big data analytics refers to the process of examining large sets of data to uncover hidden patterns, correlations, market trends, customer preferences, and other useful information. It utilises sophisticated software applications to assist with the analytical processes. This can help organisations make more informed decisions, improve operational efficiency and gain a competitive edge in the market. It encompasses various forms of analytics, from descriptive and diagnostic to predictive and prescriptive.

Big data examples include the vast amount of information collected by social networks like Facebook or Twitter, data from e-commerce sites like Amazon on customer purchases and preferences, healthcare records of millions of patients, and data collected by sensors in smart devices or Internet of Things (IoT) devices. Other examples include real-time traffic information from GPS providers, logs from call details in telecommunications, and stock exchange information tracking every transaction globally.

Big data technology refers to the software tools, frameworks, and practices employed to handle and analyse large amounts of data. These technologies include databases, servers, and software applications that enable storage, retrieval, processing, and analysis of big data. They can process structured and unstructured data effectively, and aid in making data-driven decisions. Examples of such technology include Hadoop, NoSQL databases, and Apache Flink.

Big data is used to analyse large, complex volumes of data and extract valuable information such as patterns, trends and associations. It is widely used in various industries: in healthcare for disease prediction and research, in finance to detect fraudulent transactions, in marketing to understand customer preferences and behaviours, and in sports for player performance analysis and game strategy planning. It also aids in improving business efficiency and decision-making processes, and in identifying new opportunities. Moreover, it plays a crucial role in artificial intelligence and machine learning developments.

Flashcards in Big Data

What are the three key characteristics, also known as Vs, of Big Data?

Volume (size of data), Velocity (speed of data generation and processing) and Variety (diverse forms of data).

What does Big Data refer to according to the given section?

Big Data refers to the large or complex datasets that traditional data processing application software cannot adequately handle.

What is the historical origin of the term 'Big Data'?

The term 'Big Data' originated from an article published by Erik Larson in 'American Scientist' in 1989.

What is Apache Hadoop and what is its use in big data technologies?

Apache Hadoop is an open-source framework that allows distributed processing of large datasets across clusters of computers. It is used for managing and processing large volumes of data.

What are some sectors that utilize Big Data technologies?

Sectors such as healthcare, education, e-commerce, and finance utilize Big Data technologies for various applications like predicting illness in healthcare or detecting fraudulent transactions in the financial sector.

What do big data technologies represent?

Big Data technologies represent the collection of software utilities, frameworks, and hardware devices utilised to capture, store, manage, and perform complex queries on large sets of data.
